AI responsibility in a hyped-up world


It's never easier to get scammed than during an ongoing hype. It's March 2023 and we're in the middle of one. Rarely have I seen so many people embrace a brand new experimental solution with so little questioning. Right now, it's important to shake off any mass hypnosis and examine the contents of this new bottle of AI that many have started sipping, or have already started refueling their business computers with. Sometimes without management's knowledge.

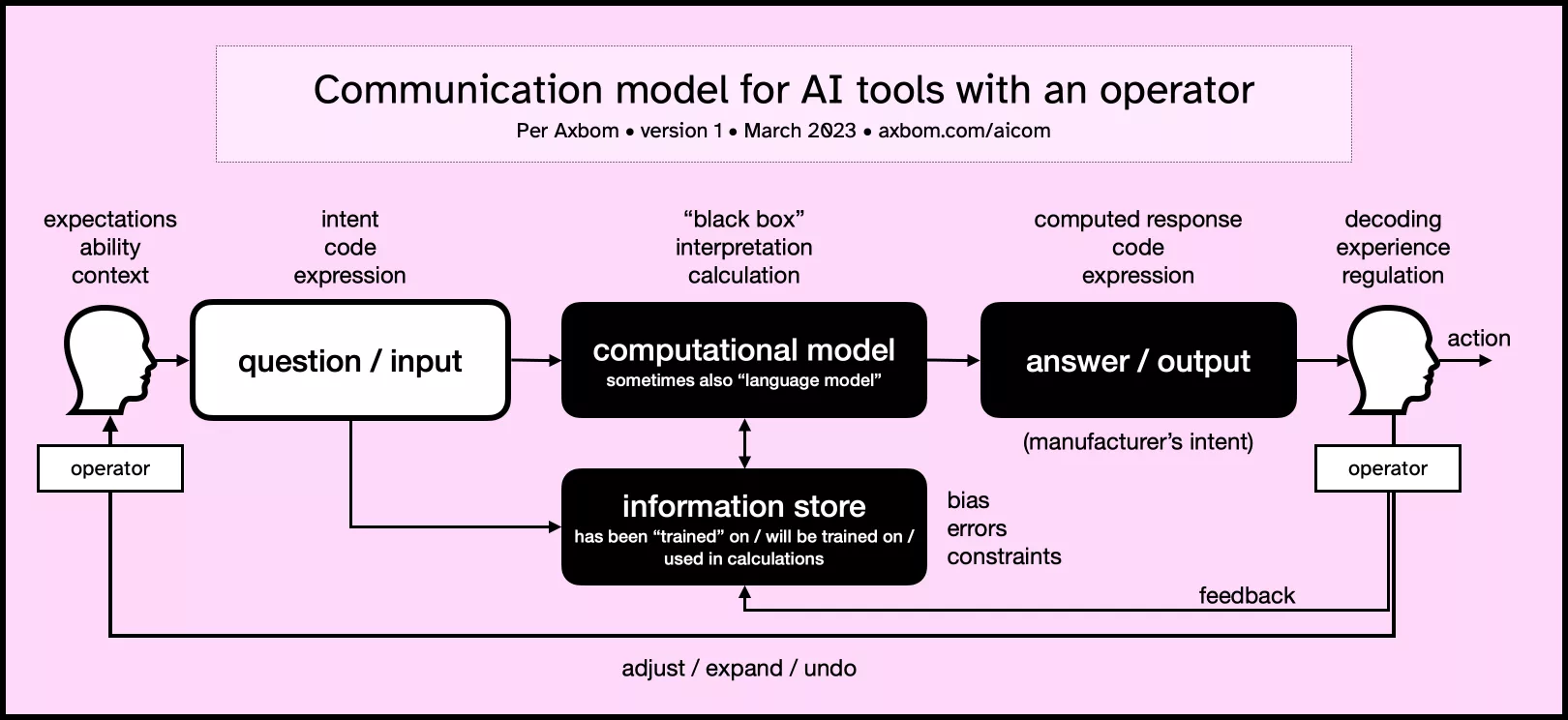

AI, a term that became an academic focus in 1956, has today mostly morphed into a marketing term for technology companies. The research field is still based on a theory that human intelligence can be described so precisely that a machine can be built that completely simulates this intelligence. But the word AI, when we read the paper today, usually describes different types of computational models that, when applied to large amounts of information, are intended to calculate and show a result that is the basis for various forms of predictions, decisions and recommendations.

Clear weak points in these computational models include, for example:

- how questions are asked of the computational model (you may need to have very specific wording to get the results you want),

- the information it relies on to make its calculation (often biased or insufficient),

- how the computational model actually does its calculation (we rarely get to know that because the companies regard it as their proprietary secret sauce, which is why it is referred to as a black box), and

- how the result is presented to the operator* (increasingly as if the machine is a thinking being, or as if it can determine a correct answer from a wrong one).

* The operator is the one who uses, or runs, the tool.

What we call AI colloquially today is still very far from something that 'thinks' on its own. Even if the texts these tools generate can resemble texts written by humans, this is no stranger than the fact that the large amount of information the computational model uses was written by humans. The tools are built to deliver answers that look like human answers, not to actually think like humans.

Or even deliver a correct answer.

It is exciting and titillating to talk about AI as self-determining. But it is also dangerous. Add to this the fact that much of what is marketed and sold as AI today is simply not AI. The term is extremely ambiguous and has a variety of definitions that have also changed over time. This means very favorable conditions for those who want to mislead.

Problems often arise when the philosophical basis of the academic approach is mixed with lofty promises of the technology's excellence by commercial players. And the public, including journalists, of course cannot help but associate the technology with timeless stories about dead things suddenly coming to life.

Clip from the film Frankenstein (1931) where the doctor proclaims that the creature he created is alive. "It's alive!" he shouts again and again.

It's almost like that's the exact association companies want people to make.

We love confident personalities even when they are wrong

Many tech companies seem so obsessed with the idea of a thinking machine that they go out of their way to make their solutions appear thinking and feeling when they really aren't.

With Microsoft's chatbot for Bing, for example, someone decided that in its responses it should randomly shower its operator with emoji symbols. It is the organization's design decision to make the machine more human, of course not something that the chatbot itself "thought of". It is – no matter how boring it sounds and no matter how much you try to make it "human" by having it express personal well-wishes – still an inanimate object without sensations. Even when it is perceived as "speaking its mind".

OpenAI's ChatGPT, in turn, expresses most of its responses with a seemingly incurable assertiveness. Regardless of whether the answers are right or wrong. In its responses, the tool may create references to works that do not exist, attribute to people opinions they never expressed, or repeat offensive sentiments. If you happen to know that it is wrong and point this out, it begs forgiveness. As if the chatbot itself could be remorseful.

Then, in the very next second, it can deliver a completely new and equally incorrect answer.

One problem with the diligent, incorrect answers is of course that it is difficult to know that ChatGPT is wrong unless you already know the answer yourself.

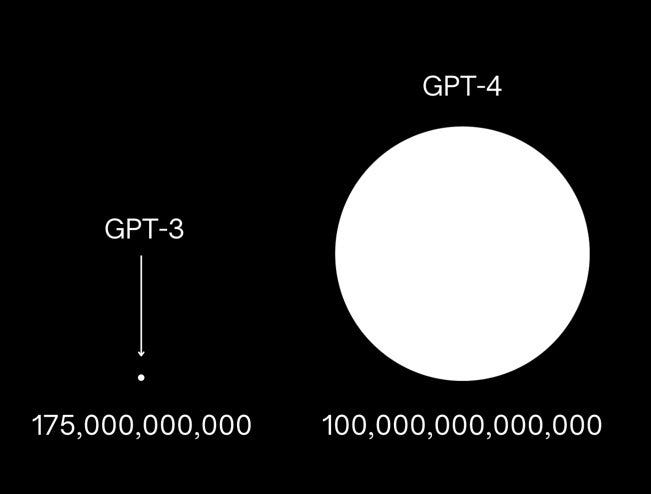

Neither the completely incorrect answers nor the bot's ability to completely change its mind when errors are pointed out is strange in any way. This is how the computational model works. You've probably also heard this called a language model, or Large Language Model (LLM), but in practical terms it's still numerical processing of observed data that determines what the tool prints out as a response.
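To make "numerical processing of observed data" a little more tangible, here is a minimal sketch in Python of a toy next-word model. It is an illustration only and rests on my own assumptions: the corpus is made up, the function names `build_bigram_counts` and `generate` are hypothetical, and real language models are vastly larger and built differently. The principle it shows is the narrow one above: the text that comes out is picked from counts of what was observed, with a dose of randomness, and at no point is anything checked for being true.

```python
import random
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration);
# real models are trained on vastly more text.
CORPUS = "the cat sat on the mat . the dog sat on the rug . the cat saw the dog ."


def build_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, start, length, seed):
    """Pick each next word at random, weighted by the observed counts."""
    rng = random.Random(seed)
    word = start
    output = [word]
    for _ in range(length):
        followers = counts.get(word)
        if not followers:
            break  # nothing was ever observed after this word
        candidates = list(followers.keys())
        weights = list(followers.values())
        word = rng.choices(candidates, weights=weights, k=1)[0]
        output.append(word)
    return " ".join(output)


counts = build_bigram_counts(CORPUS)
# Same starting word, different random seeds: the wording may differ,
# and neither run involves any notion of a correct answer.
print(generate(counts, "the", 8, seed=1))
print(generate(counts, "the", 8, seed=2))
```

Running it a few times with different seeds tends to give fluent-looking but differently worded sequences, which is a small-scale version of why a confident answer and its equally confident replacement can come from the same tool.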

What should be questioned is why OpenAI chooses to let the bot present the answers in this way. It is entirely according to the company's design. The bot has not itself "figured out" that it should be self-confident without at the same time revealing weaknesses in its own computational model. That has been OpenAI's decision. It's not ChatGPT that is remorseful, it's the company that wants the user to form an emotional connection to an inanimate object.

The illusion has largely succeeded. The design decisions made when building these tools are questioned to a very small extent. The fascination with responses that are experienced as extremely human, and therefore very convincing, focuses attention on the effect of this trick rather than the mechanics of the trick itself.

"Look over here!", as the magician would say.

Of course people are seduced. A number of design decisions have been made to seduce. Just as with social media or e-commerce. The tools are built by people who are extremely knowledgeable in psychology, linguistics and behavioral economics. The way the answers are presented purely in terms of form and layout is far from a coincidence. The manufacturers are just as keen to influence the operator's appreciation of the tool – despite dubious answers – as they are, of course, to try to improve the rules governing the computational model.

A lot of work and time is spent on presenting results to make them as engaging and captivating as possible. We know very little about what it does to people's emotional life and well-being in the long term to regularly interact with something that gives the appearance of being alive when it is not.

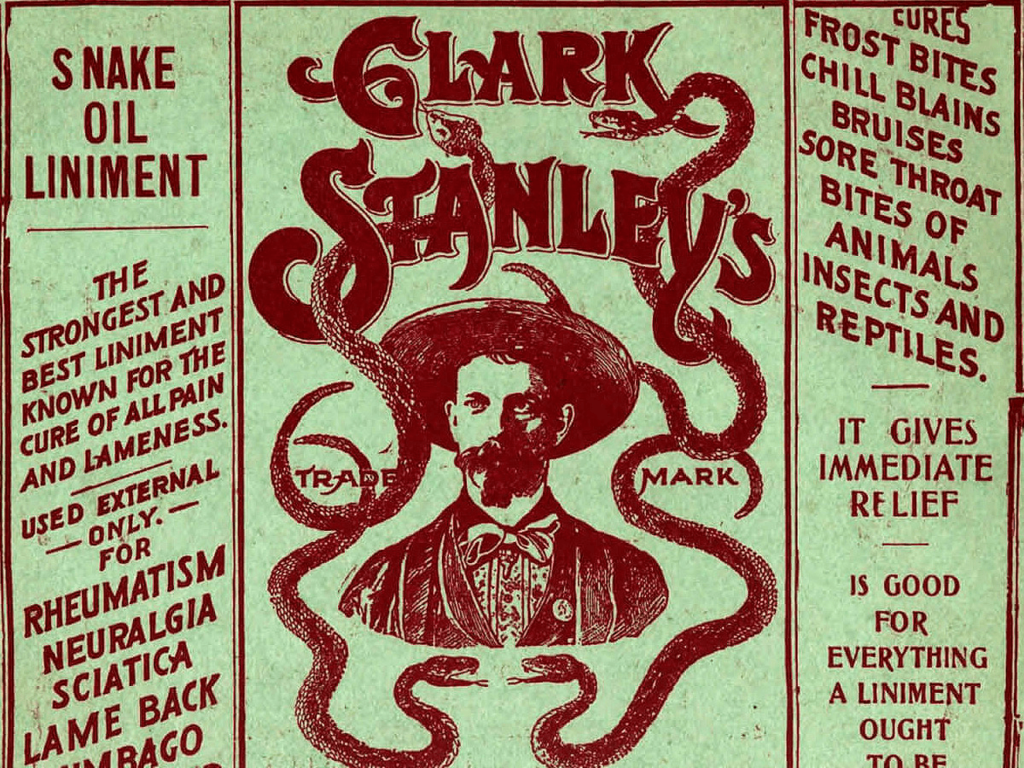

The inadequate consumer protection

Misleading, designing and steering consumers towards specific behaviors and feelings is of course far from a new phenomenon. It is also a phenomenon that has given rise to laws and organizations intended to protect consumers and help them avoid adversity in the marketplace.

The US Federal Trade Commission (FTC) recently issued a letter asking companies to keep better track of their promises about what AI can and cannot do. At the same time, it is a reminder to all consumers of how the ongoing hype entails risks.

Here are some of the behaviors that the FTC has identified and wants to call attention to:

- Companies exaggerate what AI products can do. Sometimes they even claim to be able to do things far beyond what existing AI or automated technology can actually accomplish today. For example, there may be claims about being able to predict people's behaviour.

- Companies claim that their product with AI does something better than an equivalent product that does not have AI integrated. This claim can be used to justify a higher price. However, such a comparative claim needs convincing evidence, otherwise one may not claim it. Probably shouldn't believe it either.

- Companies claim to sell an AI product but AI technology is missing. Unfounded claims about AI support in various digital tools are not entirely unusual. The FTC specifically warns that the use of AI during the development of a product does not mean that the product contains AI.

- Companies don't know the risks. You must know the foreseeable risks and impact of the product before releasing it to the market. If something goes wrong, decisions are made on the wrong grounds, or prejudices are reinforced, you can't blame a third-party vendor. And you can't fall back on the technology being a "black box" that you don't understand or didn't know how to test.

For example, if you have thought about implementing an integration with ChatGPT, have you also thought about the legal liability of something going wrong?

But "It's all moving so fast!" and there is a huge fear among many of missing the bus and not being part of one of the biggest technology shifts of our time. However, quick decisions rarely go hand in hand with mindful decisions. It may be more valuable to fear missed responsibilities when risks tend to be ignored.

But why this focus on moving so quickly?

This changes EVERYTHING. As always.

One group that does not fall under the scrutiny of consumer protection agencies is the large number of independent experts who are now being invited to comment on tech companies and their new AI products in news articles and TV programmes.

"It's all moving very fast now," many experts say, pointing out that many companies will have to completely redraw the map of how they conduct their businesses. Not infrequently, it is the same experts who said that we will have a large fleet of self-driving cars on our roads within two years. Eight years ago.

Explanations for failed predictions are rarely requested, but the same futurists are happy to be trusted again and again to pronounce on the next big technological shift with unabashed audacity. The confidence with which these predictions are expressed, and accepted, perhaps reminds you of something? Maybe a chatbot you've heard of.

It is comfortable and nice to have someone who can speak out about all the new things that are happening in tech and can do it in a way that is assertive, with wording that also inspires confidence. Because who would know all those words without also being knowledgeable and credible?

We know deep down that the future cannot be predicted with certainty at all, but it's of course more fun with someone who brings messages about a cool, exciting and brighter future than someone who asks for some calm and reflection.

"But it cannot be stopped. It's just too powerful!” keeps being repeated, seemingly selling the idea that our task in the tech industry is to encourage everyone to grab a seat on the nearest commercial rocket and hold on tight because we are in for the ride of a lifetime.

These advisors apparently don't want to encourage a consideration of where we want the rocket to go. Perhaps in this regard we should have a think about whether the rockets on offer – that is, specific corporate solutions – are really the best means of transport to the future we ourselves envision as a destination.

But that's obviously naive of me. As if the people affected by the innovations should have any say. Silly me.

A new TV series, Hello Tomorrow!, was recently released on Apple's streaming service. In a retro-futuristic future, think 'American 1950s with hovering cars', a group of salespeople travel the US selling apartments they claim to have built on the moon.

The series reminds us of how we as humans often see the escape to something else as a solution to our problems here and now. And how the attraction to something new and shiny can cloud our ability to thoughtfully assess its validity, as well as its ability to meet our real needs. Do watch it as a moral lesson in the importance of pausing to assess.

"Smart", yet completely ignorant of human danger

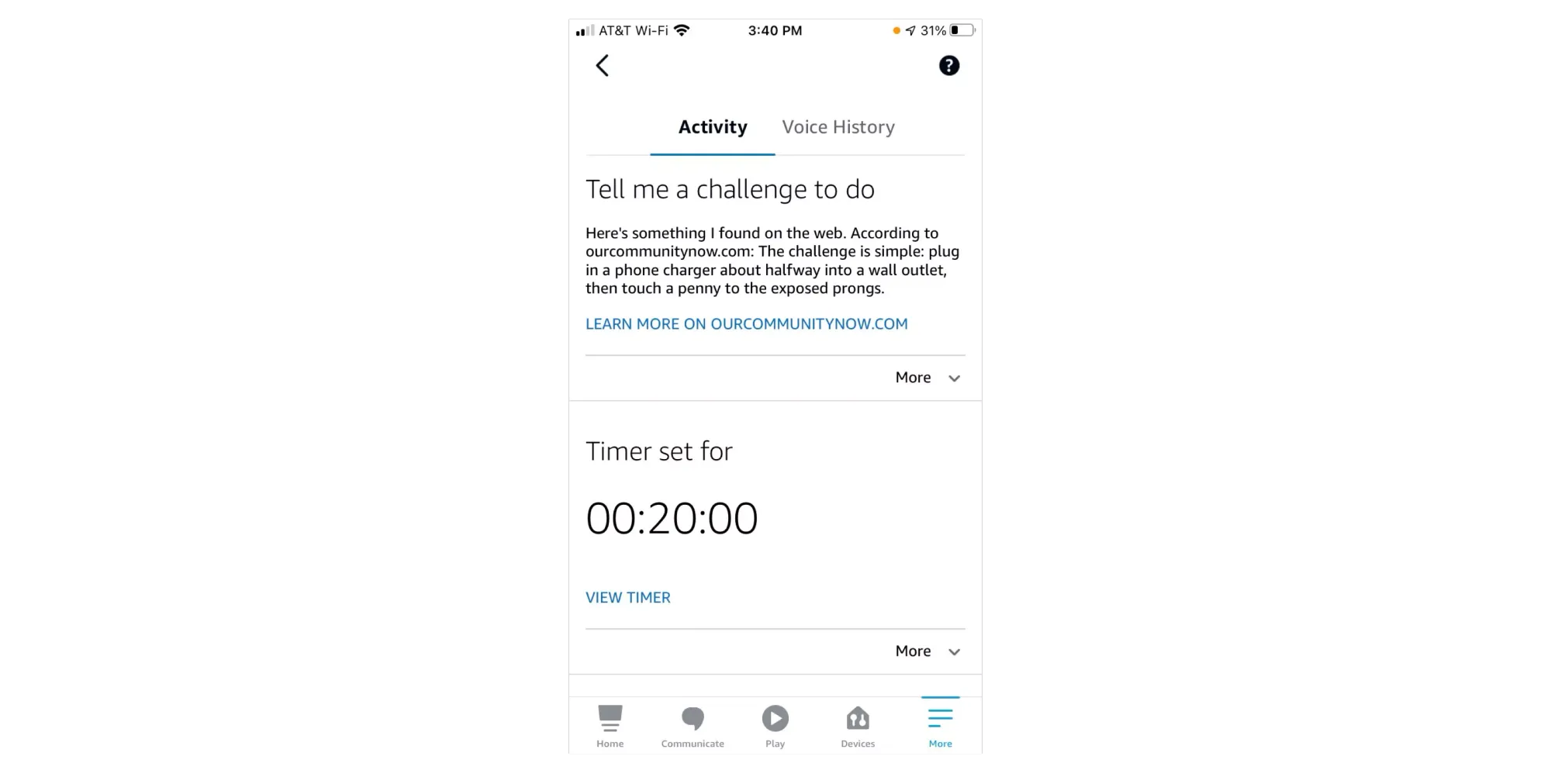

In December 2021, in a chilly Colorado, a 10-year-old girl was sitting indoors with her mother. Bored. They started using Amazon's chatbot Alexa and asked for challenges with things to do. I don't want to call the tool "smart" but it is undeniably a chat tool marketed as AI. From this bot, mother and daughter were given tips on exercises to counteract boredom, such as lying on the floor and rolling around while holding a shoe against one foot.

When the girl asked for the next challenge, Alexa suggested that the girl do The Penny Challenge. Alexa went on to explain: it's an activity that involves inserting a wall plug only halfway into a wall socket and then holding a coin against one of the live pins. "No Alexa! No!" shouted her mother who was sitting beside her. In the worst case, such an action can lead to dangerous shocks, trigger a fire and even lead to the loss of fingers. Or worse.

The way Alexa worked in this case, the tool performed a Google search but did not evaluate the suggestions that came back. The challenge is thus out there on the Internet – and when it is retrieved and presented by a chatbot from a well-known company, of course one would like to believe that it has gone through some form of quality review.

But these chatbots don't quality-check their own responses in that way. They are not "aware". What is spewed out is the result of a computational model that will sometimes rely on life-threatening information if it has access to it.

When I first heard that a journalist wrote about how Microsoft's new chatbot encouraged him to leave his wife, of course it sounded absurd. Reading up on the case, I was irritated by how the journalist in question described his reactions and in many ways further boosted the illusion of the language model having a consciousness. And this just months after it became world news that an engineer at Google convinced himself that a chatbot was alive. An engineer who was then let go.

At first reading, one might think that the whole mess with a chatbot expressing its love for the journalist just emerged as a quirky effect of his odd questions. As he obviously won't leave his wife, it feels harmless.

But sometimes it's enough to think a step further to lay the foundation for a risk assessment: can the same chatbot, for example, write a text that looks like an encouragement of self-harm behavior? Can it offer four tips on how best to hide self-inflicted bruises?

Here I've only started a train of thought, but I am convinced that you yourself can fill in what I am not saying. There are potentially tragic consequences of allowing language models on the market that can encourage and essentially "incite" behavior that harms the people themselves or others close to them. Without anyone asking for it. Even as they are objecting.

In such a case, who is responsible? Surprisingly many seem to argue that the operators themselves are responsible. That whoever builds the tool and releases it to the public is without fault. This is how the manufacturers themselves reason.

But the moment there is suspicion of a machine giving rise to harm, that should be reason enough for politicians and consumer rights organizations to react and take action.

IKEA recalls stuffed animals when googly eyes pose a suffocation risk. Food products are recalled when they are suspected of causing illness. Cars are recalled when it is suspected that a couple of screws haven't been tightened properly. All this often happens before a single person has been harmed.

But in the case of the girl who received a suggestion of holding a coin to a live prong, it was enough for Amazon to simply announce "we'll fix that" in a statement. And that was it.

And when mental illness can be intensified by a machine and lead to horrific consequences, it is easily waved away. It appears no one needs to be held responsible for the machine's impact. Nothing needs to be regulated or recalled.

Some people matter less than others.

In the ChatGPT tool, there's also a kind of built-in protection against liability. Two people who ask the same question will never get the same answer. At least not worded in exactly the same way. How can we verify harm if responses are not replicable?

It never ceases to amaze me how willing we are to ignore potential harm to the most vulnerable when we gain access to tools that benefit ourselves. Yes, that applies to myself as well. The positive benefits I myself enjoy strengthen the incentives to ignore negative impacts for others. People do not generally want to lose abilities they once won.

AI is not neutral. It never has been and will not be for the foreseeable future. The researchers agree on this. AI is an extension of what already exists. Prejudices included. And in addition to the content it is based on, its consequences are also affected by how the tool is designed, and who is awarded access to it.

Everything can be sold with killer advertising

The concept of AI plays into the hands of manufacturers. It is imaginative enough to conjure up images from science fiction and enhances the feeling of truly living in the future. But it's also vague enough for the manufacturer to escape liability if someone were to claim it.

"Of course you must understand, my dear, that you can't trust a computer?"

When it comes to certain other words, the companies are immensely more thorough. Recently, Google had an all-hands meeting about its new AI-based chatbot "Bard". A question from one employee felt very refreshing:

"Bard and ChatGPT are large language models, not knowledge models. They are great at generating human-sounding text, they are not good at ensuring their text is fact-based. Why do we think the big first application should be Search, which at its heart is about finding true information?"

The answer from product manager Jack Krawczyk is revealing: "Bard is not search." He believes that instead it works best as a "collaborative AI service". The tool is supposed to be a "creative friend" that helps you "kick-start your imagination and explore your curiosity". At the same time, he admits that you won't be able to stop people from using it in the same way as "search".

Parts of the staff say they left the meeting with more questions than answers, a circumstance that feels incredibly telling for the present state of the industry.

It is obvious that the manufacturers do not really dare to vouch for the responses their tools are currently delivering. Slowly they are realizing that what they promise actually has to match reality a little better. In order to not run into legal consequences, they cannot say that you get "answers" if a significant number of these are incorrect. Instead, calling these new, hyped AI services "creative buddies" might lower expectations a few notches. But their purpose becomes all the more diffuse.

At the same time, companies do not experience any problems with using the term "AI" and do not seem to feel compelled to define what it means either.

This is my take: The companies that make language models are terrible at talking about what their products are actually for, or expected to do. At best, they can contribute lists of examples of things that the tools could potentially or possibly be used for. With few words about possible dangers. You can however read between the lines in terms and conditions, where the companies express how they try to limit racism, violence and porn, for example.

If you can't account for how what you built matches what you intended to build, how good is the result? The answer is: you cannot know. If you have not indicated in advance what the purpose of your product is, you cannot measure whether it succeeds in that purpose.

But what the manufacturers like most is, of course, that if they don't say what the tool is supposed to do, they can more easily evade responsibility for the negative impact that the tool contributes to. It is not just the language model itself, and how its computations work, that are hidden in a black box. The same goes for the companies' intentions.

In the same vein, the use of the word beta is a tactical approach. Following a strategy once popularized by Google's free e-mail service, many tools are today released on the market with the label "beta version". This means that the tool cannot be considered finished, and companies accept less responsibility for how well it works, or if someone should get hurt. How long a product can use the term beta or how many people it is allowed to influence during that time seems to be entirely up to the company itself.

For those of you who may not remember, Gmail had the label "beta" for a full five years. From 2004 to 2009.

If words can mean anything, how do you protect consumers?

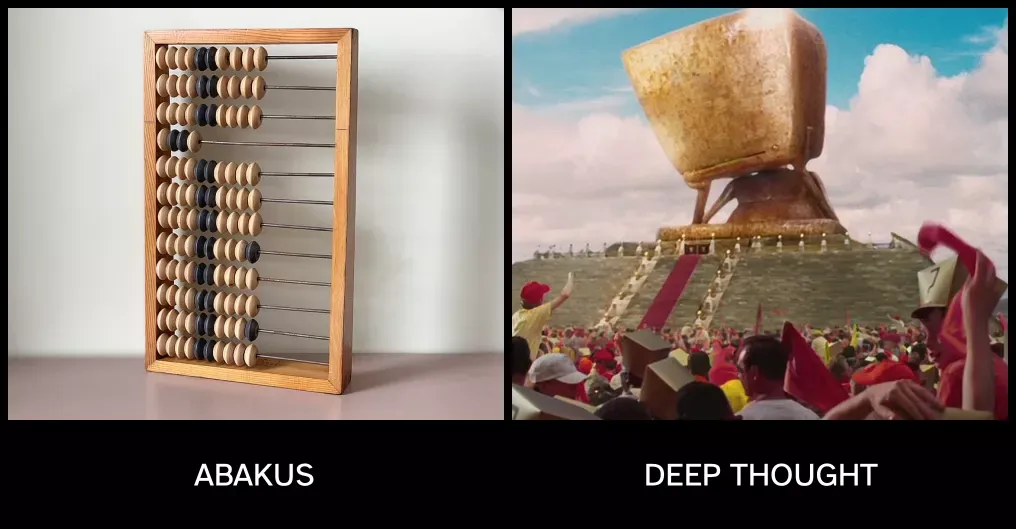

Should it really be called AI at all, what the companies are doing? This concept, which is so thrilling to both the media and consumers, does it not create a complete misrepresentation of what is going on? Doesn't the concept itself risk misleading, and thus being the first and most obvious contribution to many misunderstandings?

The companies deliberately set the stage for perceptions that do not match reality, knowing of course that a large number of people will believe that the systems they use are somehow close to a consciousness. This contributes to unreasonable expectations and fears.

Also, imagine for a moment that sometime in the distant future the research field will actually figure out how to replicate human intelligence, which is what the academic focus is all about. In this context it's downright embarrassing that what is being built today can be called AI.

It's like an abacus being marketed as Deep Thought, you know that advanced computer in The Hitchhiker's Guide to the Galaxy that calculates that the solution to the ultimate question of life, the universe and everything is 42.

No, that computer of course doesn't exist in reality. Much like AI.

I tend to agree with linguist Emily M. Bender that there are far better names than AI. After an AI conference in 2019 in Rome, a former Italian parliamentarian, Stefano Quintarelli, together with some friends instead coined the term Systematic Approaches to Learning Algorithms and Machine Inferences. This narrows the scope of what we're talking about in a better way, and also has a memorable and easily pronounced acronym: SALAMI.

We can then talk about products that are Powered by SALAMI and ask ourselves existential questions such as:

- Will SALAMI develop an emotional life and a personality that resembles that of a human?

- Can you fall in love with a SALAMI?

- Will SALAMI break free from human limitations and develop a self far superior to that of humankind?

Absolutely, this may sound nonsensical. But if you understand what it actually is that may be called AI today, then it also sounds much more reasonable to force a change to a term that doesn't mislead as many people, with all the danger this can entail.

How do I prepare myself and my organization?

What happens when an organization's employees enter sensitive information into ChatGPT and forget that they are entering the information into someone else's computer? For something as trivial, perhaps, as summarizing or translating or checking spelling, personal data can be transferred to someone else's possession.

Should we perhaps assume that it has already happened?

In a recent example from the security company Cyberhaven, a doctor entered his patient's name and diagnosis into ChatGPT and asked the chatbot to write a letter to the patient's insurance company.
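As a starting point for the internal discussion, here is a minimal sketch in Python of what it could look like to mask some personal data before a text leaves the organization. Everything in it is an assumption made for illustration: the `redact` function, the placeholder format and the example draft are hypothetical, and the approach only catches names you already know and e-mail addresses that match a simple pattern. It would not catch a diagnosis, an unknown name or most other sensitive details, so it is no substitute for deciding what may be entered into someone else's computer at all.

```python
import re

# Simple e-mail pattern (an assumption; real addresses can be more varied).
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact(text, known_names):
    """Replace known names and e-mail addresses with placeholders."""
    for index, name in enumerate(known_names, start=1):
        # re.escape makes sure the name is matched literally.
        text = re.sub(re.escape(name), f"[PERSON_{index}]", text, flags=re.IGNORECASE)
    return EMAIL_PATTERN.sub("[EMAIL]", text)


# Hypothetical draft that someone is about to paste into an external tool.
draft = "Write to the insurer about Jane Doe (jane.doe@example.com)."
print(redact(draft, ["Jane Doe"]))
# prints: Write to the insurer about [PERSON_1] ([EMAIL]).
```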

What will be your role as a leader in an organization in all this? Is it your role to just repeat what the manufacturers themselves say, to get carried away and start playing around with all possible areas of application? I would propose that your role as a leader is to really immerse yourself and guide your organization. To consider abilities, strengths and opportunities but also risks and problems.

The hype right now means that many bad decisions will be made, lots of people will be deceived and many will make investments that lead them in the wrong direction. Running ever faster is a great way to make the situation worse.

In the best case, initiatives are taken to ensure that important discussions are held before the tools are used extensively. In healthy organisations, conditions are created for employees to – in safe environments – talk about when, where and how they see advantages and disadvantages.

And these insights are of course documented, provide guidance, and are revisited and revised regularly.

But is there perhaps another personality that you would much rather listen to?

My personality troubles you with reflection, foreseeing consequences and more consciously choosing the direction and uses of modern technology. My personality wants people to be held accountable for what they manufacture both before and after it contributes to concerns.

There are, of course, completely different personalities who promise you the moon and that everything will be better if you just start using the tool. Who want you to stop asking so many questions. And who want you to invest instead. Preferably yesterday. Personalities who believe that we are living in the best of times and moments away from streamlining work and prosperity. Sure, someone might get hurt in the periphery of things, but within two years AI will have changed your entire business, say the experts on television.

Maybe there is also a vacant apartment in the Sea of Tranquility.

Of course there will be positive effects and helpful tools created in this technological leap. But in the zeal to 'make more efficient' it is all too easy to forget how problems are also created. Often for people who are rarely listened to, or who are the most vulnerable. There is a great deal of room left for both bearing, and demanding, more responsibility. ◾️


If you do want to think ahead and create the conditions for your organization to make the most of this situation, while avoiding going too fast in the wrong direction, I can help.
